As part of a larger series of posts, this lab highlights the role the CPU plays in large file transfers. The time it takes to move 10 GB of data is greatly affected by the number of files that make up that 10 GB. Each time the data is split into another file, the CPU has to build up and tear down a transfer session.

An easy way to visualize this is to imagine handing someone 100 dollars when you can only hand over one bill at a time. Handing over a single $100 bill takes far less time than handing over a $1 bill 100 times; that is 100 times more work. The same is true of data transfers: one big chunk of data is no issue, but thousands of smaller chunks take a lot more time.
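To put rough numbers on the analogy, here is a minimal Python sketch of a simple cost model: the time to stream the bytes plus a fixed cost for every file. The function name, the throughput, and the per-file overhead are all assumptions chosen for illustration; they are not measurements from this lab.

```python
GIB = 1024 ** 3
MIB = 1024 ** 2


def estimated_transfer_seconds(total_bytes, file_count,
                               throughput_bytes_per_s, per_file_overhead_s):
    """Estimate transfer time as streaming time plus a fixed cost per file."""
    streaming = total_bytes / throughput_bytes_per_s
    overhead = file_count * per_file_overhead_s
    return streaming + overhead


# Assumed values, for illustration only.
ASSUMED_THROUGHPUT = 64 * MIB    # bytes per second
ASSUMED_OVERHEAD = 0.25          # seconds of session setup/teardown per file

single = estimated_transfer_seconds(10 * GIB, 1,
                                    ASSUMED_THROUGHPUT, ASSUMED_OVERHEAD)
many = estimated_transfer_seconds(10 * GIB, 1024,
                                  ASSUMED_THROUGHPUT, ASSUMED_OVERHEAD)

print(f"1 x 10 GB file:     {single:.2f} s")
print(f"1024 x 10 MB files: {many:.2f} s")
```

The streaming term is identical in both cases because the total data is the same. Only the per-file term grows with the file count, which is the effect this lab sets out to measure.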

Lab Configuration

To demonstrate the impact, I am going to move a single 10 GB file between two systems, then compare that to moving 1024 x 10 MB files. In both cases the total data being moved is 10 GB. I have set up two new VMs: ST-TEST01 and ST-TEST02. Both VMs run on SSD storage, and the transferred data will be on the same disks as the OS.

The VM ST-TEST01 is running Windows Server 2016 with IIS and FTP set up. Data will be uploaded to this server from ST-TEST02. I chose FTP for this lab because the protocol has little overhead compared to other file transfer protocols. The VM ST-TEST02 is also running Windows Server 2016 and has FileZilla installed to do the file transfer.

Using a third-party tool called “File Generator”, made by soft.tahionic.com, I generated the test files that will be moved between systems. This tool lets you create any number of files of any size, filled with random data. With it, I created 1 x 10 GB file and 1024 x 10 MB files, stored on ST-TEST02 at “C:\FileLab\”.
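If you would rather script the data set than use a GUI tool, a comparable set of files can be produced with a few lines of Python. This is a minimal sketch and not how File Generator works internally; the function names, file naming pattern, and folder layout are assumptions.

```python
import os
from pathlib import Path


def make_random_file(path, size_bytes, chunk_bytes=1024 * 1024):
    """Write size_bytes of random data to path, one chunk at a time."""
    remaining = size_bytes
    with open(path, "wb") as handle:
        while remaining > 0:
            count = min(chunk_bytes, remaining)
            handle.write(os.urandom(count))
            remaining -= count


def make_dataset(folder, file_count, size_bytes):
    """Create file_count random files of size_bytes each inside folder."""
    folder = Path(folder)
    folder.mkdir(parents=True, exist_ok=True)
    paths = []
    for index in range(file_count):
        path = folder / f"file_{index:04d}.bin"   # hypothetical naming scheme
        make_random_file(path, size_bytes)
        paths.append(path)
    return paths
```

Calling `make_dataset(r"C:\FileLab\small", 1024, 10 * 1024 ** 2)` would create the 1024 x 10 MB set, and `make_dataset(r"C:\FileLab\large", 1, 10 * 1024 ** 3)` the single 10 GB file. The `small` and `large` subfolders are placeholders, not paths used in this lab.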

Transfer Tests

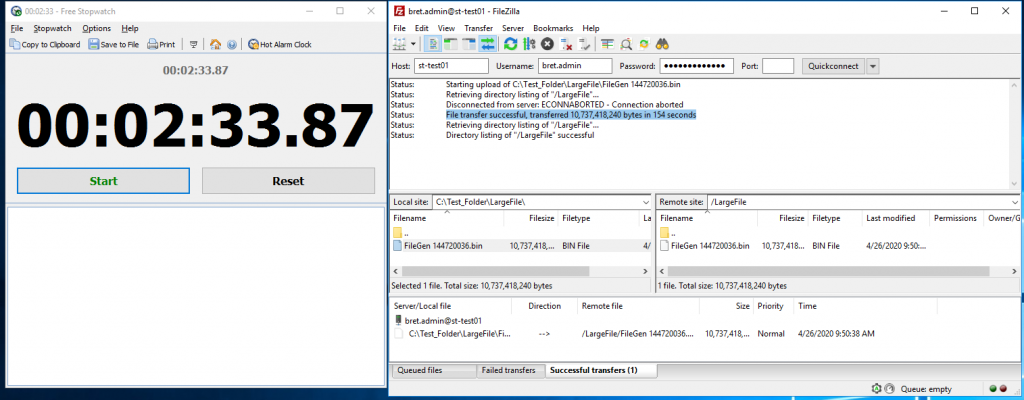

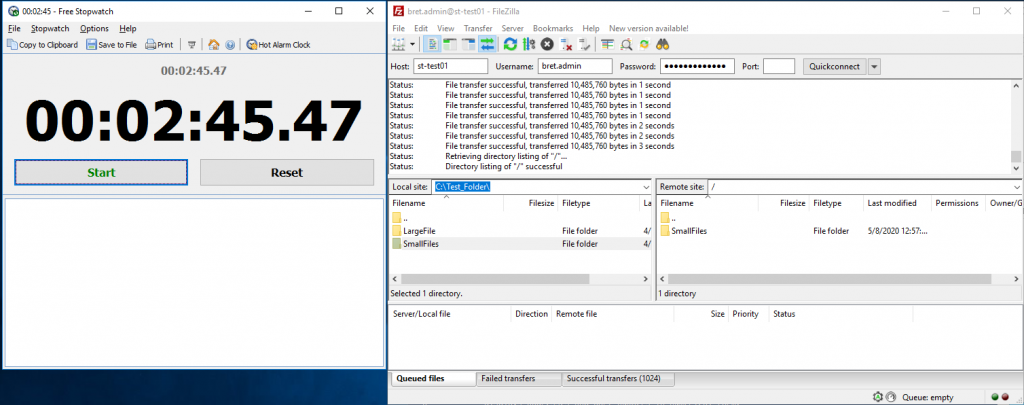

Now that the data set is ready, I start by transferring the single 10 GB file. FileZilla on ST-TEST02 initiates the transfer and is configured to use only one data stream.

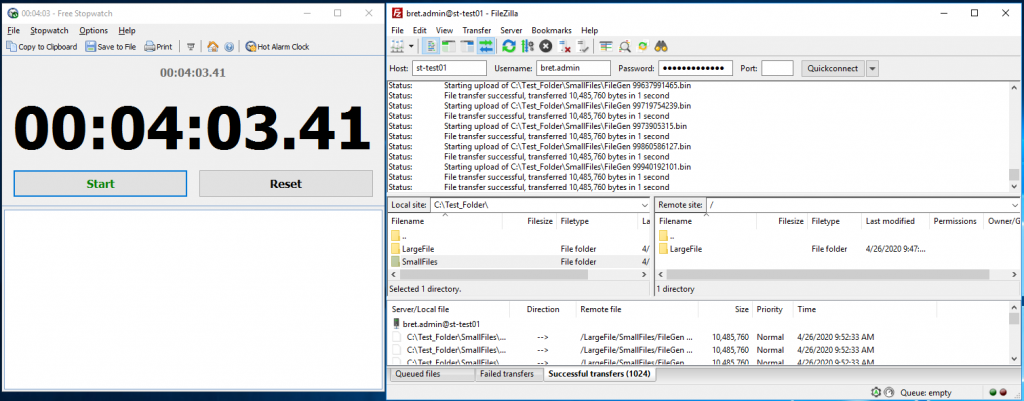

In the above image, we can see that the total transfer time for one large 10 GB file was 2 minutes and 33 seconds. Now let’s test how long the same system takes to move 1024 x 10 MB files from ST-TEST02 to ST-TEST01.
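The same comparison can be scripted instead of run through FileZilla. The sketch below uses Python's standard `ftplib` to upload a list of files one after another over a single connection and report the elapsed time. It is an approximation of the test, not the method used in this lab, and the function names and any host name, user name, password, or folder passed to it are placeholders.

```python
import time
from ftplib import FTP
from pathlib import Path


def upload_files(ftp, paths):
    """Upload each file in turn with STOR and return the elapsed seconds."""
    start = time.perf_counter()
    for path in paths:
        with open(path, "rb") as handle:
            # A separate STOR command is issued for every file, so the
            # per-file setup and teardown cost is paid once per file.
            ftp.storbinary(f"STOR {Path(path).name}", handle)
    return time.perf_counter() - start


def timed_upload(host, user, password, folder):
    """Log in to host and upload every .bin file found in folder."""
    paths = sorted(Path(folder).glob("*.bin"))
    with FTP(host) as ftp:
        ftp.login(user, password)
        return upload_files(ftp, paths)
```

Running `timed_upload` once against a folder holding the single large file and once against a folder holding the 1024 small files gives two timings that can be compared directly.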

Conclusion

As demonstrated, the number and size of the files that make up the total data to be transferred play a big part in the transfer time. One way to avoid this is to create an archive, such as a ZIP or TAR file, so the data can be moved in one chunk. The other option is to increase the number of simultaneous transfers. In FileZilla, we can run 10 file transfers simultaneously. With this configuration, transferring 1024 x 10 MB files from ST-TEST02 to ST-TEST01 took only about 13 seconds longer than the single 10 GB file.
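For the archive approach, a minimal Python sketch using the standard `tarfile` module could look like the following. It writes an uncompressed TAR, on the assumption that random test data will not compress meaningfully. The function name and any paths passed to it are hypothetical.

```python
import tarfile
from pathlib import Path


def bundle_folder(folder, archive_path):
    """Pack every file in folder into one uncompressed TAR archive."""
    folder = Path(folder)
    archive_path = Path(archive_path)
    with tarfile.open(archive_path, "w") as archive:
        for path in sorted(folder.iterdir()):
            # Skip subfolders, and skip the archive itself in case it is
            # being written into the same folder.
            if path.is_file() and path.resolve() != archive_path.resolve():
                archive.add(path, arcname=path.name)
    return archive_path
```

The resulting single file can then be transferred in one session and unpacked on the receiving side.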
